Mind Your Q&A’s

We perceive our environment through our senses and use that information to understand and respond to changing situations. Autonomous vehicles also gather information about their surroundings, but they lack an efficient way to process it and use it to make decisions. A team of researchers at the University of Michigan is working on a technique to bridge the gap between collecting this data and using it, creating a new type of situational awareness for autonomous vehicles.

“As a human explores their environment, we observe a lot, but we have the capacity to synthesize this information,” said Rada Mihalcea, professor in Computer Science and Engineering at UMich and lead investigator on the project. “When it comes to machines, they go and observe and record what they see, which produces a huge amount of information that is daunting for humans to process.”

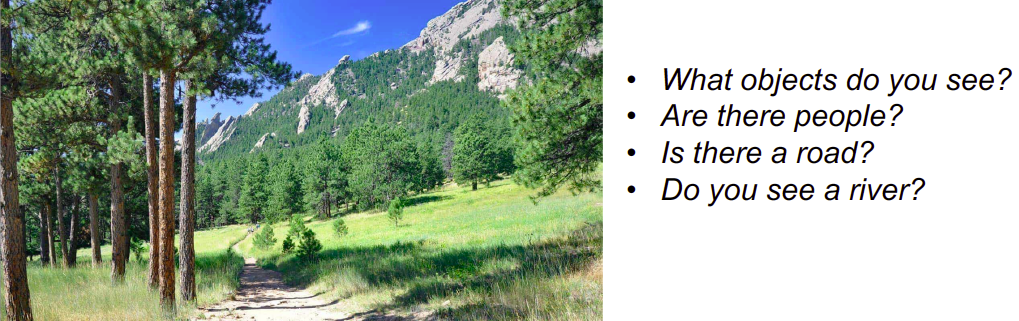

Mihalcea and her team are bringing together two fields of research, language and vision, that do not often intersect. In this project, they will merge the two disciplines to develop a system that lets people ask questions about the environment observed by a robot or autonomous vehicle. This, in turn, will improve people's ability to process that information and make decisions.

To begin, the team will construct a dataset of video recordings paired with questions about the environment, written by a group of human annotators. The researchers will then develop linguistic and visual representation algorithms and apply them to the dataset to create semantic graphs that give a holistic representation of the data. The dataset also provides a way to capture causal, spatial, and temporal information about the surroundings. The team will evaluate the approach on a subset of the data, measuring the accuracy of the system's answers.

“We are trying to allow for an interaction through natural language,” said Mihalcea. “We are developing systems that can answer questions by leveraging visual content that is observational in nature.”

The technology the team is developing is broad and may have applications in a variety of fields. Mihalcea believes it may be particularly helpful when autonomous vehicles are sent into uncharted territory where no maps or positioning information are available.

“We are at the starting line of this project,” said Mihalcea. “It is exciting, because it is a project that lies at the interaction between language and vision.”

###

Mihalcea is joined on the project “In-the-wild Question Answering: Toward Natural Human-Autonomy Interaction” by Mihai Burzo at UMich, Matt Castanier with the U.S. Army Ground Vehicle Systems Center, and Glenn Taylor with Soar Technology, Inc. The project received funding from the U.S. Army Ground Vehicle Systems Center through the Automotive Research Center at the University of Michigan.

--

Stacey W. Kish