Looking to Bats to Improve Autonomous Vehicle Vision

The future is autonomous. As companies race to put self-driving vehicles on city streets, many rely on LIDAR or RADAR sensors to build real-time, three-dimensional maps that let the vehicles maneuver through their environment. These sensors are not ideal for every situation, however. During military operations, they rob autonomous vehicles of the stealth needed to navigate hostile terrain.

“These sensors [LIDAR and RADAR] are like lighthouses shining in the night,” said Bogdan Popa, assistant professor of Mechanical Engineering at the University of Michigan and principal investigator on the project. “I gained inspiration from the natural world, where land-based animals, like bats, move around their environment using sound. I wanted to replicate this for our navigation system.”

Popa and his team set out to create an ultrasound sensor system that would let autonomous vehicles operate in uncertain environments and inclement weather without revealing their presence. Sound waves have not been embraced for this application because they do not travel through air as efficiently as light waves. Current ultrasound sensors, for example, have a range of only 10 meters and produce low-resolution maps.

“There are no suitable sensors or systems that can replicate what bats do in nature: send sounds in desired directions and listen for the return echo,” said Popa. “There are some systems, but they consume a lot of power.”

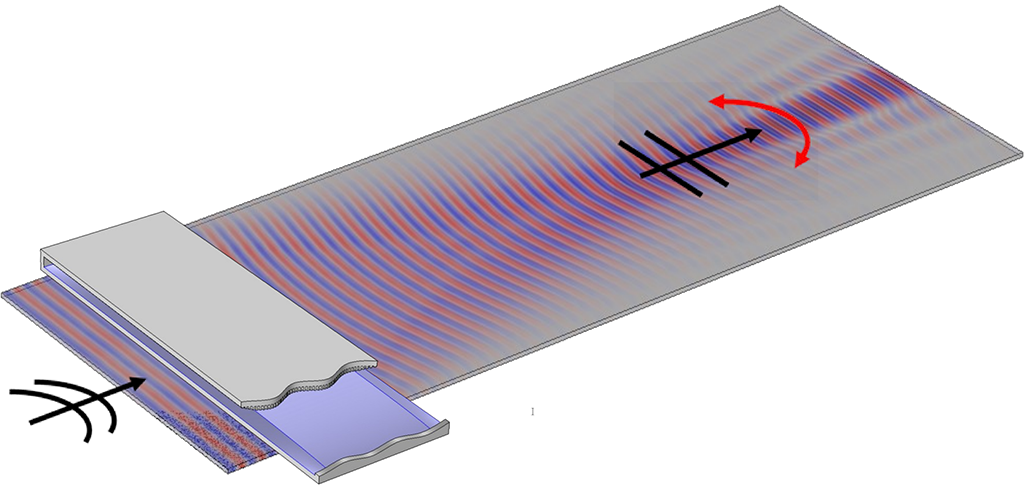

To direct the sound waves efficiently, Popa and his team used passive and active metamaterials to build an acoustic lens. Much as an optical lens focuses light, the acoustic lens focuses sound in a chosen direction. Made of two engineered pieces of patterned plastic, the lens can steer the ultrasonic waves (35–45 kHz) produced by a speaker in almost any desired direction with only a slight deformation. This means the lens and speaker can stay fixed to the vehicle and do not need to be cleaned or realigned during a mission, while still projecting a focused wave wherever the vehicle needs to gather information about its surroundings.

With the lens materials in hand, the team is now developing algorithms to interpret the returning echoes quickly and accurately. They plan to use machine learning to turn those echoes into three-dimensional maps that help autonomous vehicles navigate any terrain.
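For readers curious about the underlying principle, the most basic echolocation step can be sketched in a few lines. This is a hedged illustration, not the team's machine-learning method: it uses a textbook matched filter (cross-correlating a known ultrasonic chirp with the received signal) to find the echo's round-trip delay, then converts that delay to distance as distance = speed of sound × delay / 2. Only the 35–45 kHz band comes from the article; the 343 m/s speed of sound, the 200 kHz sample rate, the 1 ms chirp, the noise-free simulated echo, and all function names are illustrative assumptions.

```python
import math

# Illustrative assumptions (not from the article): speed of sound in air at
# about 20 degrees Celsius and the sample rate of a hypothetical receiver.
SPEED_OF_SOUND_M_S = 343.0
SAMPLE_RATE_HZ = 200_000


def chirp(duration_s, f0_hz=35_000.0, f1_hz=45_000.0, fs=SAMPLE_RATE_HZ):
    """Linear frequency sweep across the 35-45 kHz band mentioned in the article."""
    n = round(duration_s * fs)
    sweep_rate = (f1_hz - f0_hz) / duration_s  # Hz per second
    return [
        math.sin(2 * math.pi * (f0_hz * t + 0.5 * sweep_rate * t * t))
        for t in (i / fs for i in range(n))
    ]


def simulate_echo(pulse, distance_m, total_samples, attenuation=0.3,
                  c=SPEED_OF_SOUND_M_S, fs=SAMPLE_RATE_HZ):
    """Hypothetical noise-free echo: the pulse comes back delayed and weaker."""
    delay = round(2 * distance_m / c * fs)  # round-trip delay, in samples
    received = [0.0] * total_samples
    for i, p in enumerate(pulse):
        received[delay + i] += attenuation * p
    return received


def echo_delay_samples(pulse, received):
    """Matched filter: return the lag where the received signal best matches the pulse."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(len(received) - len(pulse) + 1):
        score = sum(p * received[lag + i] for i, p in enumerate(pulse))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def range_from_delay(delay_s, c=SPEED_OF_SOUND_M_S):
    """Sound travels out and back, so the one-way distance is c * t / 2."""
    return c * delay_s / 2.0


if __name__ == "__main__":
    pulse = chirp(0.001)  # 1 ms chirp, 200 samples at 200 kHz
    received = simulate_echo(pulse, distance_m=2.0, total_samples=3000)
    lag = echo_delay_samples(pulse, received)
    print(f"estimated distance: {range_from_delay(lag / SAMPLE_RATE_HZ):.3f} m")
```

In this noise-free simulation, a target placed 2 meters away is recovered to within a millimeter. The same arithmetic shows why range matters for timing: at the 10-meter limit of current ultrasound sensors, an echo takes roughly 58 milliseconds to return. Real echoes from cluttered scenes overlap and fade, which is the harder problem the team's learning-based algorithms are meant to address.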

Popa believes his team’s work could one day benefit civilians as well. As more autonomous vehicles take to the road, the technology could make them safer in communities large and small. And it all comes back to nature.

“Bats are used to operating in a very ‘cluttered’ environment, flying around with friends and talking at the same time to discover what is in front of them,” said Popa. “We are trying to replicate this in an efficient way. It is challenging but exciting.”

HyungSuk Kwon, a PhD candidate in Popa’s group, will present the findings of this research at the Acoustical Society of America meeting in Chicago, May 11–15.

Stacy W. Kish