Bridging the Communication Gap

“Autonomous vehicles are going to be our partners in the future,” said Joyce Chai, professor of Computer Science and Engineering at the University of Michigan (UMich). “We are curious to see the use of human language in the context of autonomous vehicles.”

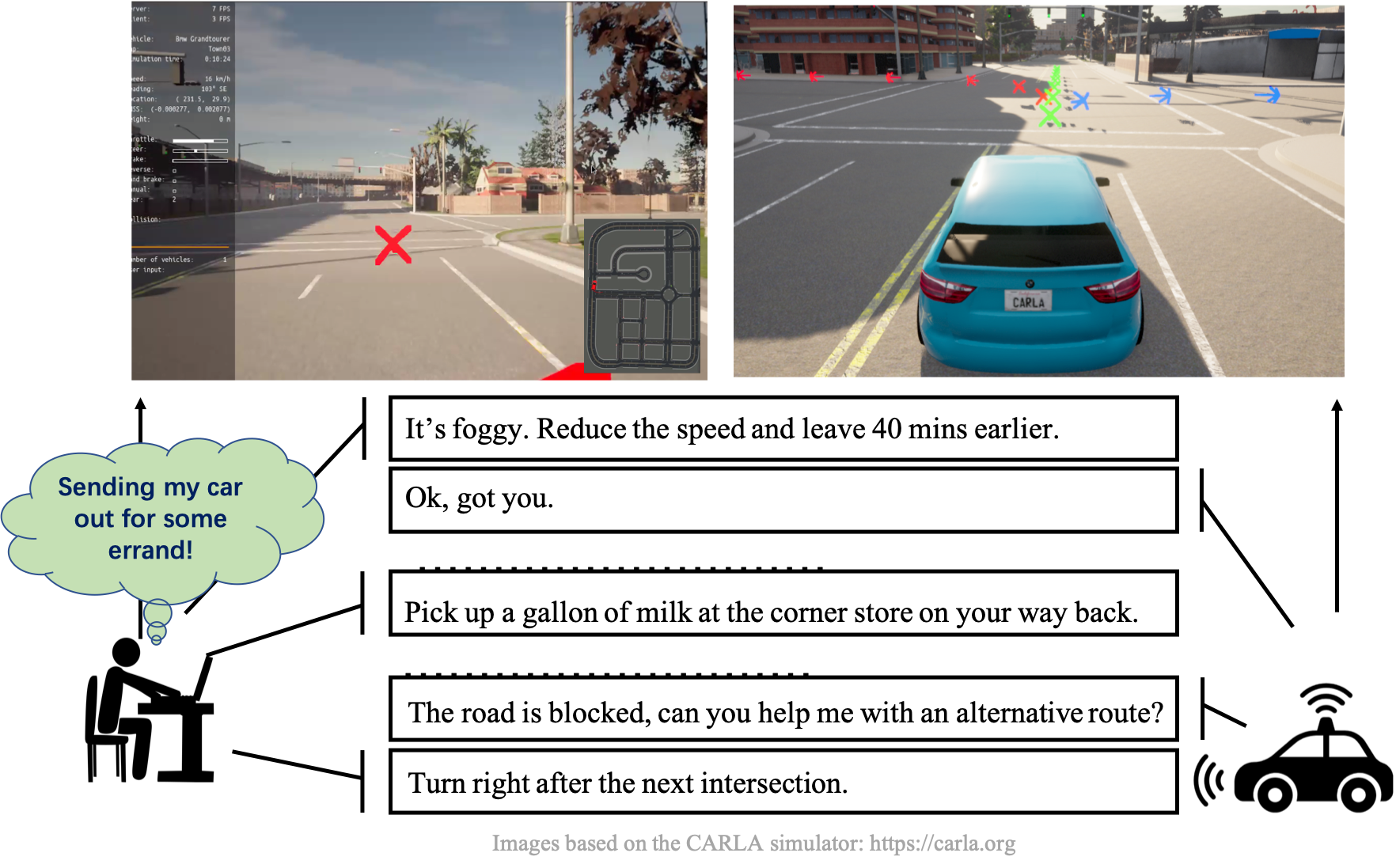

Autonomous vehicles carry out tasks independently, but unexpected events in the field can leave them adrift without the information necessary to handle these situations effectively. At these critical moments, human intervention is essential, but problem-solving requires a back-and-forth conversation.

The team is simulating different situations that an autonomous vehicle may encounter in the field. They plan to collect data on the dialogue between humans and vehicles in these situations to capture how people communicate naturally.

Sound easy? Think again.

“It’s a chicken and egg problem,” said Chai. “The human has to use the system but there has to be a system for the human to interact with, but the system has to take into account human behaviors to operate properly.”

To sidestep this complication, the team plans to, er, cheat a little. They will start by using a system that is controlled by a human, the wizard in the machine. The dialogue in this preliminary step allows the researchers to collect realistic data on how dialogue develops organically. The team will use this data to develop models that can be used by autonomous vehicles.

The team will simulate unexpected scenarios that the vehicles may encounter, such as inadequate lighting, road closures, unfavorable weather conditions, or even a change of plan in the middle of the mission. Using these simulations, the team will develop approaches that enable humans and vehicles to work through the scenario jointly. Throughout this process, they will explore both first- and third-person perspectives in the ensuing conversations.

Chai and her team will build on this approach by developing several scenarios where an autonomous vehicle and a human operator work together to accomplish a goal, from transporting resources to performing search and rescue operations.

“The key challenge is to link natural language instructions to the vehicle’s control system,” Chai said. “We will use these utterances to create executable actions and plans for the vehicles to achieve their mission.”

The data gathered during these experiments provide another opportunity to study language and dialogue, specifically the nouns, verbs, adjectives, adverbs, and spatial terms and their grounded meanings in a highly dynamic environment. The team will experiment with different approaches that explore linguistic context, perceived environment, and the internal state of the autonomous vehicle. Ultimately, this work will produce the building blocks for language communication for the future of autonomous vehicles.

###

Chai was joined on the project by Cristian-Paul Bara at UMich, Chris Mikulski and Gregory Hartman at the Ground Vehicle Systems Center, Susan Hill at the Army Research Lab, and Andy Dallas at Soar Automotive. The project is titled “Language Communication and Collaboration with Autonomous Vehicles Under Unexpected Situations” and received funding through the Ground Vehicle Systems Center at the University of Michigan.

Stacey W. Kish