Human-Autonomy Interaction

Annual Plan

In-the-wild Question Answering: Toward Natural Human-Autonomy Interaction

Project Team

Government

Matt Castanier, U.S. Army GVSC

Faculty

Mihai Burzo, University of Michigan

Industry

Glenn Taylor, Soar Technology, Inc.

Student

Santiago Castro, U. of Michigan

Project Summary

Project begins 2021.

Situational awareness remains a major goal for both humans and machines as they interact with complex and dynamic environments. Awareness of unfolding situations allows for rapid reactions to events in the surrounding environment and enables more informed, and thus better, decision making. Conversely, a lack of situational awareness is associated with decision errors, which in turn can lead to critical incidents. As we continue to make advances in the development and deployment of autonomous systems, a central question that needs to be addressed is how to equip such systems with the ability to acquire and maintain situational awareness in ways that match and complement the human ability.

Current autonomous vehicles are able to explore large and uncharted spaces in a short amount of time; however, they are not able to “report back” the information they collected in a manner that is easily accessible to human users and does not produce information overload. As an example, consider the situation of a manned vehicle followed by several autonomous vehicles. In order to maintain situational awareness for the entire fleet, the human driver in the lead vehicle needs to communicate with the autonomous vehicles in ways that are similar to human-human communication.

The main research question we are addressing is how to effectively and efficiently perform natural question answering against a large visual data stream. We are specifically targeting in-the-wild question answering, where the visual data stream closely represents real-world settings and reflects the challenges of complex and dynamic environments.

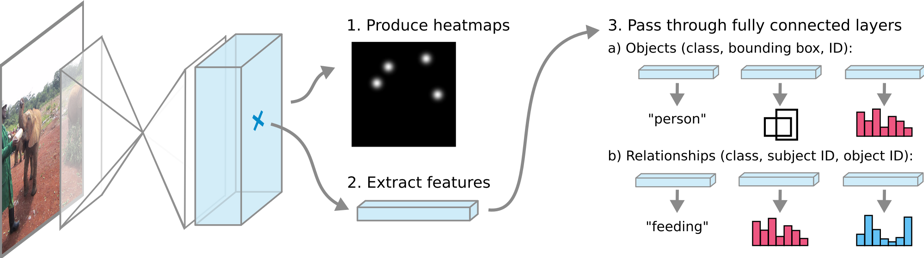

The project targets three main research objectives: (1) Construct a large dataset of video recordings paired with natural language questions that are representative of in-the-wild complex environments. This dataset will be used to both train and test in-the-wild multimodal question answering systems that can be used to enhance situational awareness. (2) Develop visual representation algorithms that convert the visual streams into semantic graphs that capture the entities in the videos as well as their relations. (3) Develop algorithms for multimodal question answering that aim to understand the type and intent of the questions, and map them against the semantic graph representations of the visual streams to identify one or more candidate answers.
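To make objectives (2) and (3) concrete, the following is a minimal illustrative sketch, not the project's actual method. It assumes a semantic graph can be approximated as a list of (subject, relation, object) triples and that question typing can be reduced to a single hand-written pattern. The names `SceneGraph`, `query`, and `answer`, as well as the example entities, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class SceneGraph:
    """Toy semantic graph: entities plus (subject, relation, object) triples."""
    entities: set = field(default_factory=set)
    triples: list = field(default_factory=list)

    def add(self, subject, relation, obj):
        # Register both endpoints as entities and store the relation.
        self.entities.update((subject, obj))
        self.triples.append((subject, relation, obj))

    def query(self, subject=None, relation=None, obj=None):
        """Return every triple matching the given fields; None is a wildcard."""
        return [
            t for t in self.triples
            if (subject is None or t[0] == subject)
            and (relation is None or t[1] == relation)
            and (obj is None or t[2] == obj)
        ]


def answer(graph, question):
    """Hypothetical question typing: handles 'What is <relation> the <object>?' only."""
    words = question.lower().rstrip("?").split()
    if words[:2] == ["what", "is"] and len(words) >= 4:
        relation, obj = words[2], words[-1]
        # Candidate answers are the subjects of all matching triples.
        return [s for s, _, _ in graph.query(relation=relation, obj=obj)]
    return []  # unsupported question type in this sketch


# Hand-built example graph; a real system would derive it from video.
graph = SceneGraph()
graph.add("truck", "near", "bridge")
graph.add("person", "near", "bridge")
graph.add("truck", "on", "road")

candidates = answer(graph, "What is near the bridge?")
print(candidates)
```

In this sketch, a question may yield several candidate answers, mirroring the "one or more candidate answers" wording of objective (3). A realistic system would replace both the hand-built graph and the pattern matching with learned components.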

Our project will make new contributions by developing novel multimodal question answering algorithms relying on semantic graph representations, and addressing real and challenging settings. Most of the algorithms that have been previously proposed for multimodal question answering have been developed and tested on data drawn from movies and TV series, which consist of acted, scripted, well-directed and heavily edited video clips that are hard to find in the real world. In contrast, our project will have to overcome the challenge of environmental noise, low lighting conditions, scenes that are less defined and not perfectly framed, and lack of subject permanence.

Publications:

- Oana Ignat, Santiago Castro, Hanwen Miao, Weiji Li, Rada Mihalcea, WhyAct: Identifying Action Reasons in Lifestyle Vlogs, Proceedings of the Empirical Methods in Natural Language Processing (EMNLP 2021), November 2021.

- Santiago Castro, Ruoyao Wang, Pingxuan Huang, Ian Stewart, Nan Liu, Jonathan Stroud, Rada Mihalcea, Fill-in-the-blank as a Challenging Video Understanding Evaluation Framework, Proceedings of the Association for Computational Linguistics (ACL 2022), Dublin, May 2022.

- Oana Ignat, Towards Human Action Understanding in Social Media Videos Using Multimodal Models, PhD Dissertation, August 2022.

- Oana Ignat, Victor Li, Santiago Castro, Rada Mihalcea, Human Action Co-occurrence in Lifestyle Vlogs with Graph Link Prediction, under submission, October 2022.

- Santiago Castro, Naihao Deng, Pingxuan Huang, Mihai Burzo, Rada Mihalcea, WildQA: In-the-Wild Video Question Answering, in Proceedings of the International Conference on Computational Linguistics (COLING 2022), October 2022.

- Santiago Castro*, Oana Ignat*, Rada Mihalcea, Scalable Performance Analysis for Vision-Language Models, submitted to the International Conference on Semantics, 2023.

* The project benefits from the contributions of Oana Ignat, who is a postdoctoral fellow.

#2.15