Vehicle Controls & Behaviors

Annual Plan

A Robust Semantic-aware Perception System Using Proprioception, Geometry, and Semantics in Unstructured and Unknown Environments

Project Team

Government

Paramsothy Jayakumar, U.S. Army GVSC

Industry

Andrew Capodieci, Neya Systems

Student

Joey Wilson, U. of Michigan

Project Summary

Project duration 2021 - 2023.

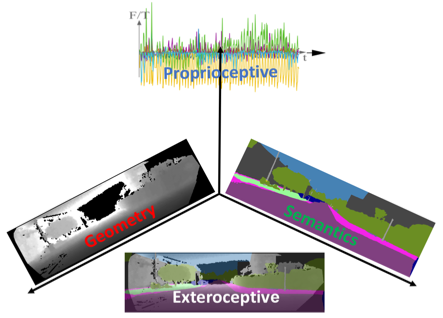

A robust, real-time perception system is a prerequisite for high-speed autonomous off-road mobility, real-world autonomy, and operation in unstructured and uncertain environments. No current perception system is robust enough to support complex, dynamic off-road missions. Without reliable proprioceptive dead reckoning, the vehicle can become lost, with no way to recover, in perceptually degraded conditions such as complete darkness, overly bright light, visually uniform terrain, or fog. Without a dense, dynamic semantic map, the vehicle lacks the scene understanding needed for informed decision-making. Furthermore, high-speed off-road autonomy requires high-frequency state estimation algorithms that run onboard within limited computational resources. The investigators treat proprioception, geometry, and semantics as independent bases that must be considered simultaneously within perception algorithms.

An autonomous off-road vehicle cannot rely on high-definition maps and structured road networks as commercial self-driving vehicles do. In an unknown environment, an autonomous vehicle's behavior is dictated by its perception capabilities. This work addresses two core needs by developing:

- a fail-safe, high-frequency proprioceptive state estimator, based on invariant observer design theory, for dead reckoning over long trajectories (i.e., 1 km or longer);

- a multilayer semantic mapping framework that models both geometry and semantics of a complex dynamic scene in 3D and runs onboard.
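The semantic mapping item can be illustrated with closed-form Bayesian kernel inference (BKI), the family of methods named in the publications below. What follows is a minimal sketch under stated assumptions, not the project's implementation: each map cell holds Dirichlet concentration parameters over K semantic classes, and every labeled point adds kernel-weighted evidence to nearby cells, so the posterior mean and variance follow in closed form. The names `SemanticCell` and `sparse_kernel`, the 0.5 m length scale, the 1e-3 prior, and the toy scan are hypothetical; the kernel is the compactly supported sparse kernel commonly used in BKI mapping.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def sparse_kernel(d: float, length_scale: float = 0.5, sigma0: float = 1.0) -> float:
    """Compactly supported sparse kernel (assumed choice): 1 at d=0, 0 for d >= length_scale."""
    if d >= length_scale:
        return 0.0
    r = d / length_scale
    return sigma0 * (
        (2.0 + math.cos(2.0 * math.pi * r)) * (1.0 - r) / 3.0
        + math.sin(2.0 * math.pi * r) / (2.0 * math.pi)
    )


class SemanticCell:
    """Hypothetical map cell storing Dirichlet concentration parameters over K classes."""

    def __init__(self, center: Point, num_classes: int, prior: float = 1e-3):
        self.center = center
        self.alpha = [prior] * num_classes

    def update(self, point: Point, label: int, length_scale: float) -> None:
        # Conjugate update: add kernel-weighted evidence to the observed class only.
        d = math.dist(self.center, point)
        self.alpha[label] += sparse_kernel(d, length_scale)

    def expected_probs(self) -> List[float]:
        # Posterior mean of the Dirichlet distribution.
        total = sum(self.alpha)
        return [a / total for a in self.alpha]

    def variance(self) -> List[float]:
        # Per-class Dirichlet variance: a_i (a_0 - a_i) / (a_0^2 (a_0 + 1)).
        total = sum(self.alpha)
        return [a * (total - a) / (total ** 2 * (total + 1.0)) for a in self.alpha]


# Toy example (hypothetical data): two cells, three classes, one labeled scan.
cells = [SemanticCell((0.0, 0.0, 0.0), num_classes=3),
         SemanticCell((1.0, 0.0, 0.0), num_classes=3)]
scan = [((0.1, 0.0, 0.0), 2), ((0.05, 0.05, 0.0), 2), ((0.9, 0.0, 0.0), 0)]
for cell in cells:
    for p, label in scan:
        cell.update(p, label, length_scale=0.5)
print(cells[0].expected_probs(), cells[1].expected_probs())
```

Because the kernel vanishes beyond the length scale, each point only updates cells within that radius, which keeps the update local and inexpensive, and the variance gives a per-cell uncertainty estimate. Treating free space as one of the classes is one common way to fold occupancy (geometry) into the same update.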

This work addresses the following fundamental research questions:

Q1: What is the performance limit of onboard proprioceptive observers in bounding drift in the absence of exteroceptive measurements or in perceptually degraded situations?

Q2: How can 3D scene flow estimated from stereo cameras or LiDAR data be consistently incorporated into a dense semantic map in real time?
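Q1 is motivated by how quickly pure proprioceptive integration drifts. As a hedged illustration only, the sketch below uses plain planar Euler integration of wheel speed and gyro yaw rate; it is not an invariant observer and has no correction step. It drives a straight 1 km path at an assumed 10 m/s and compares an ideal gyro with one carrying an assumed constant bias of 0.001 rad/s (about 0.06°/s). The function `dead_reckon` and all numeric values are hypothetical.

```python
import math
from typing import Tuple


def dead_reckon(speed: float, yaw_rate: float, gyro_bias: float,
                duration: float, dt: float) -> Tuple[float, float, float]:
    """Hypothetical planar dead reckoning from wheel speed and a (biased) gyro.

    Returns (x, y, heading) after `duration` seconds using forward Euler steps.
    """
    x = y = theta = 0.0
    steps = int(round(duration / dt))
    for _ in range(steps):
        x += speed * math.cos(theta) * dt
        y += speed * math.sin(theta) * dt
        theta += (yaw_rate + gyro_bias) * dt  # bias is integrated into heading
    return x, y, theta


# Straight 1 km drive (10 m/s for 100 s): ideal gyro vs. an assumed constant bias.
truth = dead_reckon(10.0, 0.0, 0.0, duration=100.0, dt=0.01)
biased = dead_reckon(10.0, 0.0, 1e-3, duration=100.0, dt=0.01)
error_m = math.hypot(biased[0] - truth[0], biased[1] - truth[1])
print(f"position error after 1 km: {error_m:.1f} m")
```

For a small bias b, speed v, and duration T, the lateral error grows roughly as v·b·T²/2, about 50 m here, i.e., quadratically in time, which illustrates why unaided dead reckoning over 1 km or more is demanding.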

Presentations:

- IEEE ICRA 2022 Workshop: Robotic Perception and Mapping: Emerging Techniques (https://sites.google.com/view/ropm/)

Software:

- 2021: https://github.com/UMich-CURLY/BKIDynamicSemanticMapping

- 2022: https://github.com/UMich-CURLY/husky_inekf

- 2023: https://github.com/UMich-CURLY/NeuralBKI

- 2024: https://github.com/UMich-CURLY/3DMapping

- 2024: https://github.com/UMich-CURLY/BKI_ROS

#1.36

Publications:

Wilson, J., Fu, Y., Friesen, J., Ewen, P., Capodieci, A., Jayakumar, P., … & Ghaffari, M. (2024). ConvBKI: Real-time probabilistic semantic mapping network with quantifiable uncertainty. IEEE Transactions on Robotics.

Wilson, J., Fu, Y., Zhang, A., Song, J., Capodieci, A., Jayakumar, P., … & Ghaffari, M. (2023, May). Convolutional Bayesian kernel inference for 3D semantic mapping. In 2023 IEEE International Conference on Robotics and Automation (ICRA) (pp. 8364-8370). IEEE.

Wilson, J., Song, J., Fu, Y., Zhang, A., Capodieci, A., Jayakumar, P., … & Ghaffari, M. (2022). MotionSC: Data set and network for real-time semantic mapping in dynamic environments. IEEE Robotics and Automation Letters, 7(3), 8439-8446.

Unnikrishnan, A., Wilson, J., Gan, L., Capodieci, A., Jayakumar, P., Barton, K., & Ghaffari, M. (2022). Dynamic semantic occupancy mapping using 3D scene flow and closed-form Bayesian inference. IEEE Access, 10, 97954-97970.